#Airflow docker image for kubernetes code#

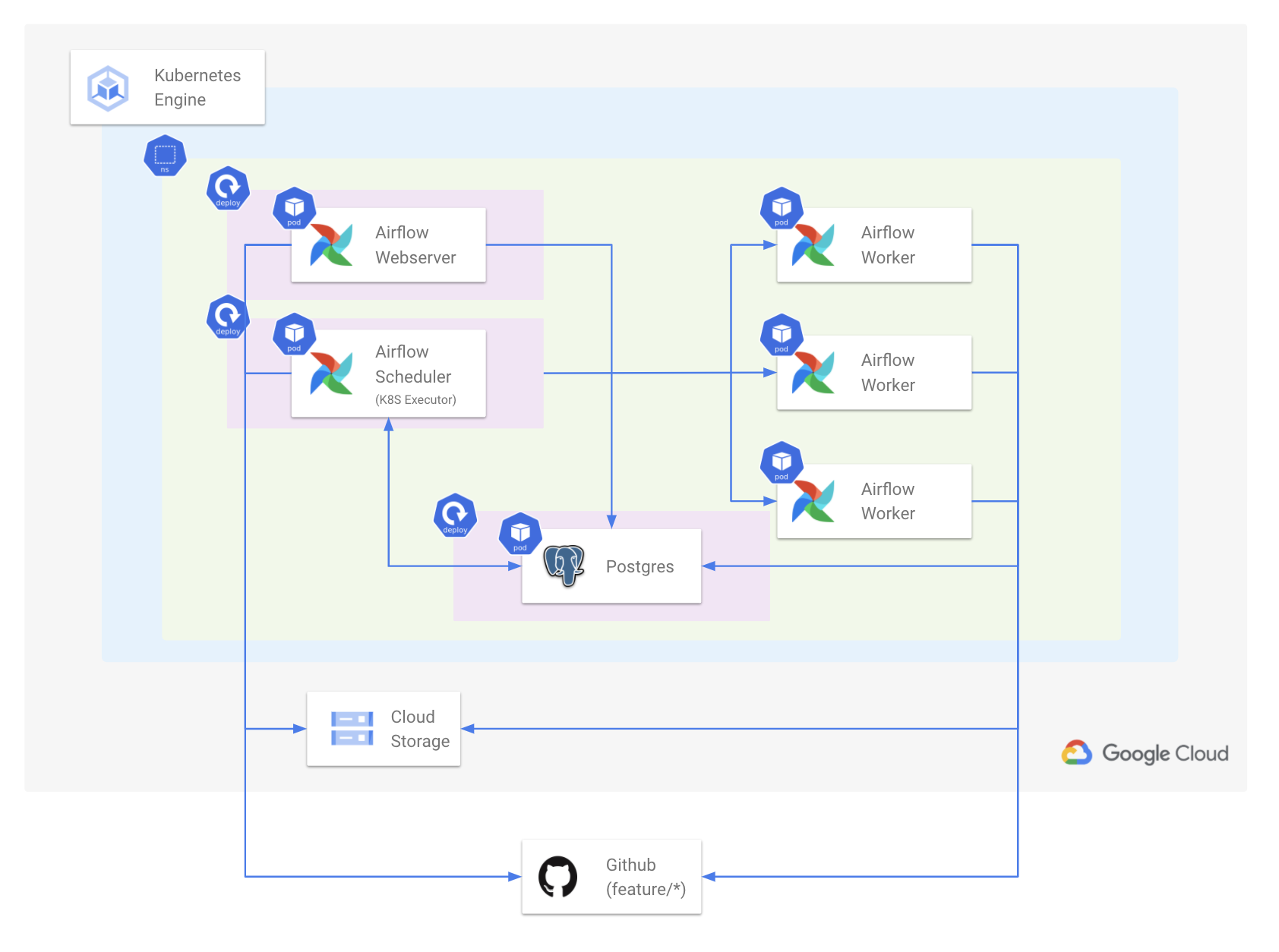

Airflow already has operators for frameworks such as BigQuery, Apache Spark, and Hive, and it also lets developers write their own connectors for other requirements. Developers who use Airflow are consistently trying to make deployments and ETL (Extract, Transform, Load) pipelines easier to manage. To that end, developers and DevOps engineers are always looking for ways to decouple pipeline steps and increase monitoring, which reduces the risk of future outages and of firefights (emergency allocation of resources in response to unexpected load or stress).

Using Airflow with Kubernetes helps with the following:

More flexibility when deploying applications: Airflow's plugin API offers great support when engineers want to test new features in their DAGs. However, whenever a developer wrote a new operator, he or she had to build a whole new plugin. With Airflow on Kubernetes, developers can run any task inside a Docker container using the exact same operator, without extra Airflow code to maintain.

#Airflow docker image for kubernetes full#

From the beginning, Airflow has been known for its flexibility. It provides integrations for various services offered by several cloud providers and for other platforms such as Spark, and its plug-in framework makes it very simple to extend. There is one drawback, however: Airflow users must use the clients and frameworks provided by the Airflow worker at execution time. A company usually has multiple workflows for different purposes, such as data science pipelines and production applications. These different purposes lead to dependency management problems, because the teams involved use different libraries for their different use cases.

Kubernetes solves this problem by allowing users to create and launch different Kubernetes configurations and pods. Once the pods are set up, Airflow users have full control over resources, run environments, and secrets, which makes Airflow more powerful and lets users run any job they want through Airflow workflows.

For more information on Airflow operators, please read this blog.
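To make the per-task control over resources, run environments, and secrets more concrete, here is a minimal sketch that builds a Kubernetes Pod manifest for each task using only the Python standard library. The helper `build_pod_manifest`, the image names, and the secret name are hypothetical and chosen for illustration; only the manifest field names (`apiVersion`, `kind`, `spec.containers`, `resources`, `secretKeyRef`) come from the Kubernetes Pod specification. It does not talk to a cluster or to Airflow.

```python
import json


def build_pod_manifest(task_name, image, command, cpu="500m", memory="512Mi", secret_env=None):
    """Return a Kubernetes Pod manifest (as a dict) for a single workflow task.

    secret_env maps an environment variable name to a (secret_name, secret_key)
    pair, so the value is read from a Kubernetes Secret instead of being hard-coded.
    """
    env = [
        {
            "name": env_name,
            "valueFrom": {"secretKeyRef": {"name": secret_name, "key": secret_key}},
        }
        for env_name, (secret_name, secret_key) in (secret_env or {}).items()
    ]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": task_name},
        "spec": {
            "restartPolicy": "Never",  # a task pod should run once and stop
            "containers": [
                {
                    "name": "base",
                    "image": image,  # each task brings its own libraries
                    "command": command,
                    "env": env,
                    "resources": {
                        "requests": {"cpu": cpu, "memory": memory},
                        "limits": {"cpu": cpu, "memory": memory},
                    },
                }
            ],
        },
    }


# Hypothetical data science task: heavy image, more CPU and memory.
training_pod = build_pod_manifest(
    task_name="train-model",
    image="example.com/data-science:1.0",
    command=["python", "train.py"],
    cpu="4",
    memory="8Gi",
)

# Hypothetical production ETL task: different image, reads a password from a Secret.
etl_pod = build_pod_manifest(
    task_name="load-orders",
    image="example.com/etl:2.3",
    command=["python", "load_orders.py"],
    secret_env={"DB_PASSWORD": ("warehouse-credentials", "password")},
)

print(json.dumps(etl_pod, indent=2))
```

Because every task gets its own image, the data science team and the production team can depend on different libraries without sharing a single worker environment.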

To perform actual operations, we need operators. Operators act as placeholders that let us define which operations we want to perform.
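The idea of an operator as a placeholder for "what to do" can be illustrated with a toy model in plain Python. This is not Airflow's code: the classes `Operator`, `PrintOperator`, and `ContainerOperator` and the function `run_in_order` are hypothetical names made up for this sketch, and the only thing borrowed from Airflow is the general shape of a task object with an `execute(context)` method.

```python
from abc import ABC, abstractmethod


class Operator(ABC):
    """Toy stand-in for an operator: it names a task and defines what it does."""

    def __init__(self, task_id):
        self.task_id = task_id

    @abstractmethod
    def execute(self, context):
        """Perform the operation; subclasses decide what that means."""


class PrintOperator(Operator):
    """Operation: produce a message."""

    def __init__(self, task_id, message):
        super().__init__(task_id)
        self.message = message

    def execute(self, context):
        return f"{self.task_id}: {self.message}"


class ContainerOperator(Operator):
    """Operation: describe a command that should run inside a container image.

    A real operator would ask Kubernetes to launch a pod; this toy version
    only returns a description of what would be launched.
    """

    def __init__(self, task_id, image, command):
        super().__init__(task_id)
        self.image = image
        self.command = command

    def execute(self, context):
        return {
            "task_id": self.task_id,
            "image": self.image,
            "command": self.command,
            "run_date": context["run_date"],
        }


def run_in_order(operators, context):
    """Execute each operator in sequence and collect the results."""
    return [op.execute(context) for op in operators]


results = run_in_order(
    [
        PrintOperator("say_hello", "starting pipeline"),
        ContainerOperator("transform", "example.com/etl:2.3", ["python", "transform.py"]),
    ],
    context={"run_date": "2024-01-01"},
)
```

The workflow only knows that each step has an `execute` method; which operation actually runs is decided by the operator chosen for that step.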

#Airflow docker image for kubernetes free#

Apache Airflow is a free, open-source framework for orchestrating and managing workflows.
